Two days ago, the Div.3 (Codeforces Round 938 (Div. 3)) suffered from severe issues of hacks, because the problem G (1955G - GCD on a grid) did not have proper validation for the sum of $$$nm$$$ in hacks, which was specified as at most $$$2 \cdot 10^5$$$ in the statements. Sure, I won't ask about why that happened, that is not constructive discussion. Instead, I will discuss about something very interesting about the task.

During that incident, I was wondering. There has to be a way to solve this without the constraint on $$$n \cdot m$$$, right? Of course, $$$7\times 10^8$$$ bytes of input is impossible anyways, but if we ignore that, $$$10^8$$$ is not a very "dreaded" number under these ages of optimizations. There has to be a way to do this.

Then the idea came to me.

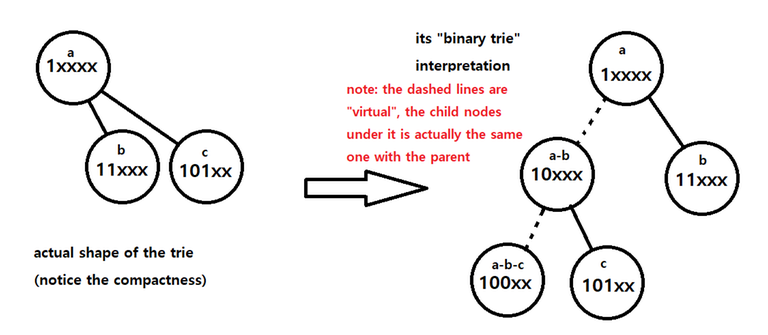

Before we cover the solution, we cover a few basic facts on number theory — it might not be necessary to know this to understand the solution, but it will be helpful. Basically, every integer is a point on the grid of infinite dimensions. Each dimension represents a prime factor, so if we restrict the domain to divisors of some integer, it becomes $$$O(\log x)$$$ dimensions because there are only $$$O(\log x)$$$ prime factors of an integer. $$$\gcd$$$ and $$$\text{lcm}$$$ becomes much more tractable to deal with on this grid, because they become simply $$$\min$$$ and $$$\max$$$ on each dimension. Same with divisibility, if each exponent on $$$a$$$ is no less than the corresponding exponent on $$$b$$$, then $$$a$$$ is divisible by $$$b$$$.

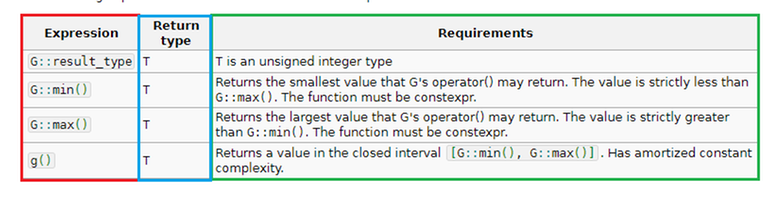

Now to the solution. The grid of divisors of $$$a_{1,1}$$$ has $$$O(\log a)$$$ dimensions and $$$d(a)$$$ points, so if we use the same idea on how one flattens a multidimensional array to one dimension, we can map each divisor (point) to their corresponding indices in one array. So, let us consider using a bitset of divisors, so each cell in the DP table can comfortably store the status of each divisor comfortably.

Let us make a bitmask for each divisor $$$mask_d$$$, defined as the union of all divisors of $$$d$$$. Let the multiplier on prime $$$p$$$ while flattening the multidimensional grid be $$$mult_p$$$ (From the facts above, one can see this is essentially the product of $$$\text{exponent}+1$$$ for all smaller primes). Then, $$$mask_1=\texttt{0000...0001}$$$, and $$$mask_d=mask_{(d/p)}|(mask_{(d/p)} \ll mult_p)$$$ if $$$d$$$ is divisible by some prime $$$p$$$. From preprocessing a sieve we have information on all such values of $$$p$$$, so this can be computed nicely as well.

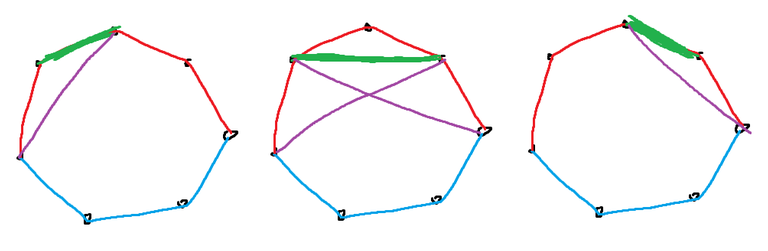

Now we assume WLOG all values in $$$a$$$ are divisors of $$$a_{1,1}$$$ (if it isn't then we can take GCD to make it so). Let $$$b_{i,j}$$$ be the $$$mask$$$ corresponding to the value of $$$a_{i,j}$$$. Then the DP transition becomes as follows —

And of course, the base condition is $$$dp_{1,1}=b_{1,1}$$$.

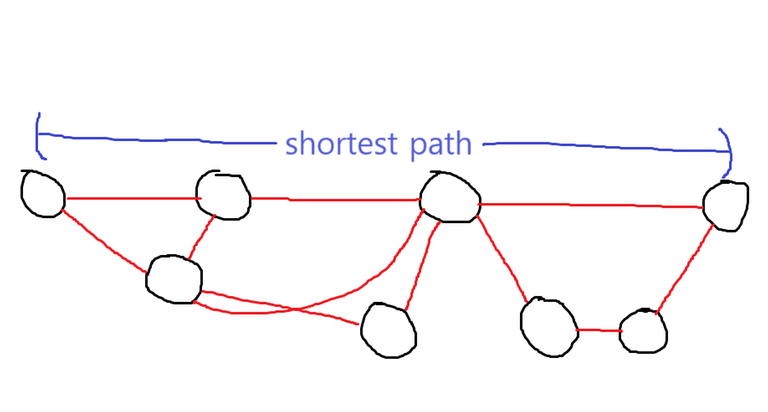

After finding $$$dp_{n,m}$$$, we can see that if $$$mask_d$$$ for some $$$d$$$ is completely contained in $$$dp_{n,m}$$$, then there exists a path whose GCD is divisible by $$$d$$$. So we try that for each $$$d$$$, and take the maximum $$$d$$$ where the divisibility condition holds.

The time complexity analysis is simple. Because it takes $$$\mathcal{O}(\sqrt{a})$$$ time to enumerate divisors of $$$a_{1,1}$$$, and processing $$$mask$$$-s takes $$$\mathcal{O}(\frac{d(a)^2}{w})$$$ time, we must use $$$\mathcal{O}(\sqrt{a}+\frac{d(a)^2}{w})$$$ time per test case. Then, there are $$$\mathcal{O}(nm)$$$ transitions in the DP, each taking $$$\mathcal{O}(\frac{d(a)}{w})$$$ time. So the DP takes $$$\mathcal{O}(nm\frac{d(a)}{w})$$$ time. Also as we did $$$\gcd$$$ for each cell, the $$$\gcd$$$ must take $$$\mathcal{O}(nm\log(a))$$$ Finally, trying the divisibility for each $$$d$$$ takes $$$\mathcal{O}(\frac{d(a)^2}{w})$$$ again, but that is already counted in the time complexity per test case so we are fine. The final time complexity required is $$$\mathcal{O}(t(\sqrt{a}+\frac{d(a)^2}{w})+\sum{nm}({\log(a)+\frac{d(a)}{w}}))$$$. Because $$$\frac{d(a)}{w}$$$ is such a small constant (precisely $$$4$$$), it should scale well for much larger values of $$$nm$$$, and even possibly run even when there were no constraints on the sum of $$$nm$$$, that is $$$\sum{nm}=10^8$$$ in the worst situation.

255932465 is the accepted submission, and the benchmark is as follows. For all cases $$$a_{i,j}=720\,720$$$ was used for all cells because that is the worst case for $$$d(a)$$$ (though $$$\gcd$$$ might be worse for other values). Only informations of $$$n$$$ and $$$m$$$ were input for each test case, to minimize the effect from IO bound. All benchmark results are from custom invocations. The result was as follows.

| Case | Runtime |

|---|---|

| $$$t=100,n=100,m=100$$$ | $$$46\text{ms}$$$ |

| $$$t=1000,n=100,m=100$$$ | $$$217\text{ms}$$$ |

| $$$t=10000,n=100,m=100$$$ | $$$1358\text{ms}$$$ |

| $$$t=100,n=300,m=300$$$ | $$$171\text{ms}$$$ |

| $$$t=1,n=1000,m=1000$$$ | $$$92\text{ms}$$$ |

| $$$t=1,n=2000,m=2000$$$ | $$$187\text{ms}$$$ |

| $$$t=1,n=3000,m=3000$$$ | $$$359\text{ms}$$$ $$$^\dagger$$$ |

| $$$t=1,n=4000,m=4000$$$ | MLE $$$^\dagger$$$ |

$$$^\dagger$$$ The stack overflowed, so I had to move the array dp to static (global range).

May you have any question, please ask in comments! Thank you for reading this far.