TL;DR: for some reason the Codeforces judging system runs much slower than oj.uz.

Hi everyone! Since IOI is approaching, I've started upsolving IOI problems. I think you've heard of the IOI archive on Codeforces.

Unfortunately, I've run into the following (at least twice): the same code submitted to oj.uz and to Codeforces gets a different number of points. Surprisingly, not in Codeforces' favor.

I assumed that code would run faster on the Codeforces servers. In practice we have the following:

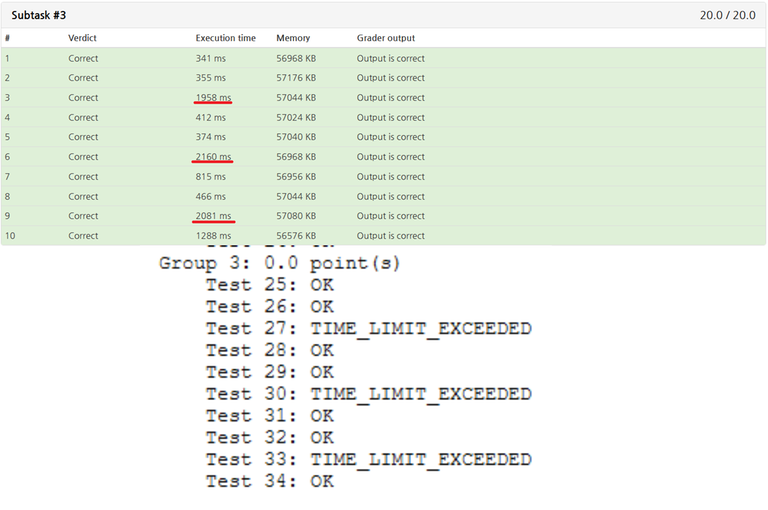

Problem IOI18_seats: the TL on Codeforces is 3 seconds, which should be more than enough given the 2 seconds oj.uz needs to run it.

Another example:

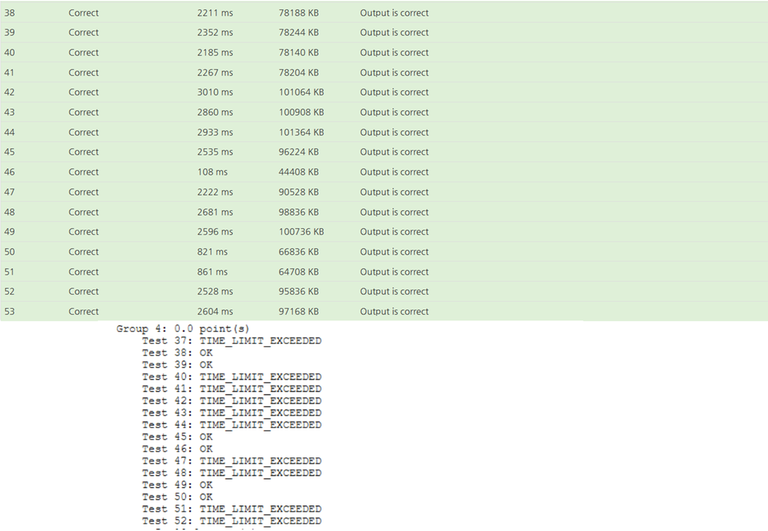

Problem IOI19_rectangles: again TLE on Codeforces, while passing the tests on oj.uz.

I understand that it's normal for suboptimal solutions to fail or to run right at the limit. The problem is that on oj.uz they run comfortably for some reason, even though (in my opinion) it should be the slower one?

Apologies in advance for the ping if this is not your responsibility, but PavelKunyavskiy, MikeMirzayanov, maybe this can be fixed somehow. Thanks!

Upd. submission links:

IOI19_rectangles: codeforces , oj.uz

IOI18_seats: codeforces , oj.uz

Auto comment: the text was translated by TimDee (original revision, translated revision, compare).

Auto comment: topic has been updated by TimDee (previous revision, new revision, compare).

As I see time limits are different: 3s on cf and 4s-5s on oj.uz

Additionally, why are the standings open in the IOI archive while a virtual contest is ongoing? The problem is not really the standings themselves, but the fact that Codeforces shows the number of people who solved each problem, which can spoil whether a problem is easy or hard.

This annoyed me as well. I solved the scoreboard part by hiding non-official (=all) participants, and wrote a simple extension (firefox) that hides the number of accepted solutions for problems in the main page. I can share it here if you want, but I only now submitted it for approval in the extension workshop, so until it gets verified you'll have to open it manually every time you quit the browser.

That was fast! It got through automatically, here

I think at some point on IOI they added some statistics like "total number of points by all participants" in cms to be available during contest.

But the basic answer is that hiding it is just not implemented, as there was no request before, and it doesn't seem to be a priority.

I don't recall such a thing (I participated last year). Or did you mean it was like that at some point in the past and has since been removed?

In any case, a real implementation that solves the issue would be helpful for others, but I'm not the one to say whether it's of high priority.

It was implemented and used in IOI 2018 Japan if I recall correctly. That system was not used again after that year (I remember there being discussions around it, and there wasn't a strong consensus among countries to make the change, so IOI returned to status-quo on this).

When I attended IOI 2019 as a participant it was implemented (but I don't know about the year before).

I am not sure whether we are talking about the same thing, but the "percentage of points per task" live stats during the contest were removed for 2019. You can see it struck through in the 2019 rule changes with respect to the previous year: https://ioi2019.az/en-content-14.html

Also the 2018 and 2019 GA minutes talk about these points (live stats) and the corresponding discussions. https://ioinformatics.org/page/general-assembly/5

From those ISC report minutes regarding the 2018 IOI Survey:

"Rule changes: For the live statistics, students and leaders had mixed feelings about keeping them for the next year (2019), but more people did not want to keep it for future IOIs, so we removed it. Answers varied wildly depending on the roles."

My bad, it was implemented at the national competition.

Okay, so my recollection was outdated.

Still, on oj.uz it runs in ≈2 seconds (at least for the Seats problem). Shouldn't that be sufficient to pass the 3 s TL on Codeforces?

Had a similar issue with IOI2013D1 art class, got different scores with the same submission (but only ~5 points more on oj.uz)

That's more interesting. I don't see a reason why it can happen (except TLs). Can you send submission links?

79.75pts on codeforces and 84pts on oj.uz

Hm. Interesting. But correct based on number of tests passed.

I'm not really sure, but I think there was no test data in any reasonable format in archive. So maybe we converted it a bit differently.

I know I shouldn't write this while my vc is still going on, but I'm starting to lose my mind. after debugging my solution (0pts on IOI2013D2 game) for ~2 hours I gave in to my gut feeling and submitted it on oj.uz... instant AC. I submitted a bruteforce solution and it worked for the first subtask (expected), but my real solution still gets zero with every compiler on codeforces. can you please look into it? codeforces oj.uz

What is your verdict? (I mean, is it TLE?)

WA on all tests in the first two groups (after that it stopped testing, and gave me the partial score of 0, which made the evaluation almost instant) . Running the code locally on official test data produced correct results for every test I've tried.

Okay, that one is funny. The problem is that you are not initializing lf and rg in your tree. Different compilers and OSes can lead to different results in that case. So you have a Runtime Error, which works on oj.uz just because you are lucky. What I can recommend here: if your samples pass locally but not on the judge, run them with address sanitizer or valgrind.
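To make the point about uninitialized children concrete, here is a minimal sketch of a dynamically allocated tree where every node starts in a defined state. Only the names `lf` and `rg` come from the comment above; the `Node` and `Tree` types and the point-add/range-sum operations are hypothetical and are not the code from the submission.

```cpp
#include <cassert>
#include <vector>

// Hypothetical node type. The default member initializers guarantee that
// a freshly created node has no children, on every compiler and OS.
struct Node {
    int lf = -1;        // index of the left child, -1 means "no child"
    int rg = -1;        // index of the right child, -1 means "no child"
    long long sum = 0;  // sum of all values stored in this subtree
};

struct Tree {
    std::vector<Node> nodes;

    int new_node() {
        nodes.push_back(Node{});
        return static_cast<int>(nodes.size()) - 1;
    }

    // Adds delta at position pos; node v covers the half-open range [lo, hi).
    void add(int v, int lo, int hi, int pos, long long delta) {
        assert(lo <= pos && pos < hi);
        nodes[v].sum += delta;
        if (hi - lo == 1) return;
        int mid = lo + (hi - lo) / 2;
        if (pos < mid) {
            if (nodes[v].lf == -1) {
                int c = new_node();  // may reallocate, so index nodes afterwards
                nodes[v].lf = c;
            }
            add(nodes[v].lf, lo, mid, pos, delta);
        } else {
            if (nodes[v].rg == -1) {
                int c = new_node();
                nodes[v].rg = c;
            }
            add(nodes[v].rg, mid, hi, pos, delta);
        }
    }

    // Sum over [l, r); a missing child (-1) contributes 0.
    long long query(int v, int lo, int hi, int l, int r) const {
        if (v == -1 || r <= lo || hi <= l) return 0;
        if (l <= lo && hi <= r) return nodes[v].sum;
        int mid = lo + (hi - lo) / 2;
        return query(nodes[v].lf, lo, mid, l, r) +
               query(nodes[v].rg, mid, hi, l, r);
    }
};
```

Without the `= -1` initializers, `query` would follow whatever garbage happens to sit in `lf` and `rg`, which is exactly the kind of bug that behaves differently between judges. With GCC or Clang, building with `-fsanitize=address,undefined` usually turns the resulting out-of-bounds access into a clear report; valgrind can additionally flag the uninitialized read itself.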

The trickier part is why you were receiving WA instead of a runtime error. This was caused by a combination of three problems

I'm not sure what I can do here, but I'll think about how I can rewrite the interactor to avoid this. Or maybe we should fix testlib. Or just not worry about cheaters and remove this strange stuff.

One more note: I got the idea of making problems interactive for protection from official archives. And they are using testlib in interactors. And I have no idea how cms handles priorities in different interactive cases.

Oh well that was just incredibly dumb of me... By:

you mean ls and rs in SegTreap, right? in my defense the WA+AC in oj.uz really threw me off (especially on the day after I read this blog), but it's still my problem... :(

Anyway, thanks for the help, and for the additional information!

Unfortunately, I don't have a reasonable answer to any of these questions, but some reasoning on why different things can happen.

First, life is more complex than there being a "fast judge" and a "slow judge." For example, oj.uz is probably using Linux, which can speed up IO a lot. Or maybe they have a more optimized grader. Also, different compiler versions, different cache sizes, etc. So it's never possible to set up fully equivalent mirrors. And to be honest, the IOI style of tasks, with tight time limits and enormous inputs, makes this even worse.

Second, I didn't have any good guidance on how to set time limits. I often didn't have solutions for all subtasks, only for the last ones. So a time limit can be strange, or impossible to pass with some intended subtask solutions. I tried to set it to at least twice the running time of the solution I had for the full subtasks. But this can also be bad, because that solution can be over-optimized in a way that was never intended to be required. If someone has ideas on how to set this up better, feel free to share them.

Still, thanks =)

My opinion about "what should TL be" might be too biased, so I'd wait to see if others have similar problems/request

P.s. I understand there's no such thing as slow or fast judge, still I thought there might be something like "judgement for codeforces groups is done on different servers from ones that judge 'main' codeforces submissions (which may be slower)".

[sorry for necroposting],

PavelKunyavskiy, another problem(s):

IOI2011 day2,

elephant: the statement for subtask 3 says N<=5000, but it's 50000 in the original problem; please fix this, as it causes misunderstanding :D

also, the images in the statement are in the wrong order

also, once again TL issue:

oj.uz / codeforces

crocodiles: c++17 c++20; the c++17 and c++20 submissions get different scores. It could be UB, but I checked and the code seems to be safe

Statements are fixed.

As for the last problem, I have no idea. I asked the Codeforces admins to check. My only idea is that IO is significantly slower with the Codeforces C++20 compiler (MinGW always has problems).
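For readers wondering how IO cost can depend so much on the toolchain: much of it comes from how many calls go through the C/C++ runtime's stream layer. Below is a minimal sketch of the usual workaround, reading everything with a few large `fread` calls and parsing integers by hand. The helper names `slurp` and `parse_ints` are hypothetical, and whether a contestant can apply this at all depends on who performs the IO in a given archive problem (the solution or the grader).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// Reads the whole stream into memory using large fread calls,
// so the runtime's per-call overhead is paid only a few times.
std::string slurp(std::FILE* in) {
    assert(in != nullptr);
    std::string data;
    char chunk[1 << 16];
    std::size_t got;
    while ((got = std::fread(chunk, 1, sizeof chunk, in)) > 0) {
        data.append(chunk, got);
    }
    return data;
}

// Parses all signed decimal integers from the buffer, skipping anything else.
// No overflow checking: values are assumed to fit in long long.
std::vector<long long> parse_ints(const std::string& data) {
    std::vector<long long> out;
    std::size_t i = 0, n = data.size();
    while (i < n) {
        // Skip until a digit or a minus sign.
        while (i < n && data[i] != '-' && (data[i] < '0' || data[i] > '9')) ++i;
        if (i == n) break;
        bool neg = false;
        if (data[i] == '-') { neg = true; ++i; }
        if (i == n || data[i] < '0' || data[i] > '9') continue;  // stray '-'
        long long v = 0;
        while (i < n && data[i] >= '0' && data[i] <= '9') {
            v = v * 10 + (data[i] - '0');
            ++i;
        }
        out.push_back(neg ? -v : v);
    }
    return out;
}
```

A typical use would be `auto values = parse_ints(slurp(stdin));`. This is an illustration of the general technique only, not a diagnosis of the crocodiles submissions.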

Thank you!

PavelKunyavskiy, IOI2014D — subtask 10, a[i] should be <= 10^9, not 10^6

Fixed too. Please, if you have more, send them as one big batch, not one by one :)

I've pointed out some of the problems under "IOI archive" blog, but didn't get any feedback (didn't check if they were fixed either), so I'm reposting them here.

It seems I'm not getting notifications from it. Issues on https://github.com/kunyavskiy/ioi-on-codeforces would be more robust.

Fixed subtask dependency, failed to find extra 1, can you show exact place?

Seems to be fixed now :)

PavelKunyavskiy There is a problem with the memory limit on IOI 2014 D1 P2: here it gives MLE, but on oj.uz it passes for 100 points. Edit: same language, same code, same constraints.

You should at least give some links to the submissions if you expect any investigation.

https://codeforces.com/group/32KGsXgiKA/contest/103767/submission/214189376 https://oj.uz/submission/785607

Well. Array T in this code is almost 256 megabytes large, while the usage reported on oj.uz is much less. This can probably happen because of Windows vs Linux, as Linux can avoid physically allocating memory that was never touched. So these look like valid results to me.
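The difference between declared and touched memory can be sketched as follows. The array name `T` follows the comment above, but the size here is a hypothetical, much smaller value, and the helper functions are made up for illustration. The assumption is that one judge charges the full declared size of a global array while another only sees the pages the program actually wrote to.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical size (about 4M ints); the array in the submission is far larger.
constexpr std::size_t kElems = std::size_t{1} << 22;

// A zero-initialized global array. Its full size is part of the program's
// address space even if most of it is never used.
static int T[kElems];

// Number of bytes the array occupies as declared.
constexpr std::size_t declared_bytes() { return sizeof(T); }

// Writes only the first `count` elements and returns how many bytes that
// prefix covers. On a system with lazy allocation, roughly only these
// bytes (rounded up to whole pages) need physical memory.
std::size_t touch_prefix(std::size_t count) {
    if (count > kElems) count = kElems;
    for (std::size_t i = 0; i < count; ++i) {
        T[i] = static_cast<int>(i);
    }
    assert(count == 0 || T[count - 1] == static_cast<int>(count - 1));
    return count * sizeof(int);
}
```

If the solution only ever needs a prefix of the array, sizing it to the actual constraints (or using a container that grows on demand) keeps the declared size close to the touched size, so the result no longer depends on how a particular judge measures memory.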

Thank you!